On Monday, Meta announced that it will crack down on accounts that share “unoriginal” content on Facebook, meaning accounts that repeatedly reuse someone else’s text, photos, or videos.

Meta says it has already removed almost 10 million profiles this year that were impersonating well-known content creators.

It has also taken action against 500,000 accounts engaged in “spammy behavior or fake engagement.”

These measures have included demoting the accounts’ comments and reducing the distribution of their content to keep them from making money.

Meta’s update comes just days after YouTube said it was clarifying its policy on unoriginal content, including mass-produced and repetitive videos that have become easier to make with AI technology.

Like YouTube, Meta says users who engage with other people’s content, for example by making reaction videos, joining trends, or adding their own perspectives, will not be penalized.

Instead, Meta is targeting the reposting of other people’s content, whether by spam accounts or by accounts posing as the original creator.

The company says accounts that repeatedly reuse other people’s content will be barred from Facebook’s monetization programs and will have their posts distributed less widely.

When Facebook detects duplicate videos, it will also reduce their distribution so that the original creator gets the views and the credit.

The company is also testing a feature that adds a link on duplicate videos pointing back to the original content.

Image Credits:Meta

The update comes as Meta faces criticism from users on Instagram and its other apps over overzealous, automated enforcement of its policies.

Nearly 30,000 people have signed a petition calling on Meta to fix the wrongful disabling of accounts and its lack of human support, which petitioners say has left users feeling abandoned and has hurt many small businesses.

Although the issue has drawn attention from the press and from prominent creators, Meta has yet to publicly address it.

While Meta’s latest crackdown mainly targets accounts that profit from stealing content, unoriginal content more broadly is a growing problem.

AI slop, a term for low-quality media produced with generative AI, has spread across platforms as AI tools have become widely available.

On YouTube, for example, text-to-video AI tools have made it easy to find an AI voice layered over photos, video clips, or other repurposed content.

Although Meta’s changes appear to address only repurposed content, its post suggests the company may also have AI slop in mind.

In a section offering “tips” for creating original content, Meta advises creators to focus on “authentic storytelling” rather than short videos with little substance, and not to simply be “stitching together clips” or adding their watermark to content from other sources.

Although Meta does not say so explicitly, AI tools have also made these kinds of unoriginal videos easier to produce, since low-quality videos often consist of nothing more than a series of images or clips, real or AI-generated, with AI narration added on top.

In the post, Meta also warns creators against reposting content from other apps or sources, which has long been one of its rules.

It adds that video captions should be high quality, which could mean relying less on automatically generated AI captions that the creator has not edited.

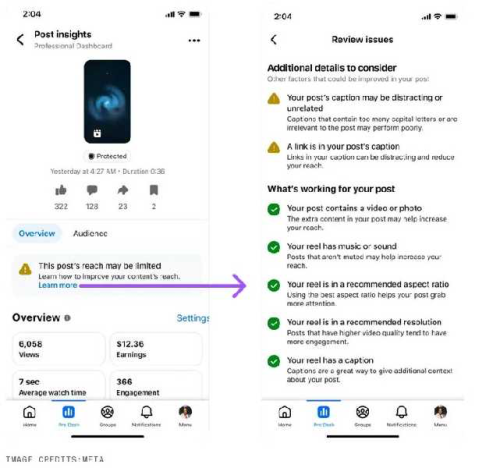

Image Credits:Meta

Meta says these changes will roll out gradually over the coming months to give Facebook creators time to adjust.

Creators can use new post-level insights in Facebook’s Professional Dashboard to understand why their content may not be getting distributed.

Creators can also check the Support home screen, reachable from the main menu of their Page or professional profile, to see whether they are at risk of penalties affecting content recommendation or monetization.

Meta typically shares details about its takedowns in its quarterly Transparency Reports. The company said it removed 1 billion fake accounts between January and March 2025, and that fake accounts made up 3% of its worldwide monthly active users in the most recent quarter.

Like X, Meta has recently moved away from doing its own fact-checking in the U.S. in favor of Community Notes, which let users and contributors assess whether posts are accurate and comply with Meta’s Community Standards.

Source: TechCrunch